An artificial neural network is a type of machine learning model that takes input, recognizes patterns, and then makes a decision or prediction about the output.

In one of my previous posts, "Solve General Problems with Artificial Intelligence," I discussed an approach to artificial intelligence (AI) referred to as the physical symbol system hypothesis (PSSH). The theory behind this approach is that human intelligence consists of the ability to take symbols (recognizable patterns), combine them into structures (expressions), and manipulate them using various processes to produce new expressions.

As philosopher John Searle pointed out with his Chinese room argument, this ability, in and of itself, does not constitute intelligence, because it requires no understanding of the symbols or expressions. For example, if you ask your virtual assistant (Siri, Alexa, Bixby, Cortana, etc.) a question, it searches through a list of possible responses, chooses one, and provides that as the answer. It doesn't understand the question and has no desire to answer the question or to provide the correct answer.

AI built on the PSSH is proficient at storing lots of data (memorization) and pattern-matching, but it is not so good at learning. Learning requires the ability not only to memorize but also to generalize and specify.

Human evolution has made us experts at memorization, generalization, and specification. To a large degree, it is how we learn. We record (memorize) details about our environment, experiences, thoughts, and feelings; form generalizations that enable us to respond appropriately to similar environments and experiences in the future; and then fine-tune our impressions through specification. For example, if you try to pet a dog, and it snaps at you, you may generalize to avoid dogs in the future. However, over time, you develop more nuanced thoughts about dogs—that not all dogs snap when you try to pet them and that there are certain ways to approach dogs that make them less likely to snap at you.

The combination of memorization, generalization, and specification is a valuable survival skill. It also plays a key role in enabling machine learning — providing machines with the ability to recognize unfamiliar patterns based on what they already "know" about familiar patterns.

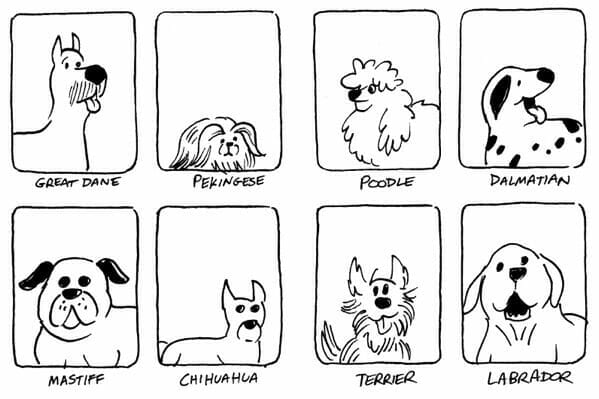

Look at the following eight images. You can tell that the eight images represent different breeds of dogs, even though these aren't photographs of actual dogs.

You know that these are all pictures of dogs, because you have encountered many dogs in your life (in person, in photos and drawings, and in videos), and you have formed in your mind an abstract idea of what a dog looks like.

In their early stages of learning, children often overgeneralize. For example, upon learning the word "dog," they call all furry creatures with four legs "dog." To these children, cats are dogs, cows are dogs, sheep are dogs, and so on. This is where specification comes into play. As children encounter different species of four-legged mammals, they begin to identify the qualities that make them distinct.

Compared with humans, computers are far better at memorization and far worse at generalization and specification. A computer could easily memorize the eight images of dogs, but if you fed it a ninth image of a dog, as shown below, it would likely struggle to match it to one of the existing images and identify it as an image of a dog. In other words, the computer would quickly master memorization and pattern-matching but struggle to learn due to its inability to generalize and specify.
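The gap between memorizing and generalizing can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the three-number feature vectors, the `MEMORIZED` table, and both function names are hypothetical stand-ins for real image data, and nearest-neighbor matching is just one simple way to generalize, not a method described in this post.

```python
# Hypothetical feature vectors (snout length, ear size, tail length), scaled 0..1.
# Real images would need far richer features; these values are made up.
MEMORIZED = {
    (0.8, 0.3, 0.6): "dog",
    (0.7, 0.5, 0.5): "dog",
    (0.2, 0.4, 0.7): "cat",
    (0.3, 0.3, 0.8): "cat",
}


def lookup_by_memory(features):
    """Pure memorization: succeeds only on an exact match with a stored example."""
    return MEMORIZED.get(features, "unknown")


def nearest_neighbor(features):
    """Crude generalization: return the label of the closest stored example."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    closest = min(MEMORIZED, key=lambda stored: squared_distance(stored, features))
    return MEMORIZED[closest]


# A new example that resembles the stored dogs but matches none of them exactly.
new_image = (0.75, 0.4, 0.55)
print(lookup_by_memory(new_image))   # exact lookup finds nothing
print(nearest_neighbor(new_image))   # similarity-based lookup still answers
```

The exact-match lookup has nothing to say about an input it has never stored, while the similarity-based version can still label it by leaning on what it already "knows."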

For the same reason, language translation programs built this way have long struggled with accuracy. Developers have created physical symbol systems that translate words and phrases from one language to another, but these programs never really learn the language. Instead, they function like an old-fashioned foreign language dictionary and phrasebook: they look up words and phrases in the source language, find the matching word or phrase in the destination language, and then stitch the results together to produce the translation. These translation programs often fail when they encounter unfamiliar words, phrases, or even syntax (word order).
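A minimal sketch of the dictionary-and-phrasebook approach shows where it breaks down. The `PHRASEBOOK` entries and the `translate` function are hypothetical and written for illustration only; they are not taken from any real translation system.

```python
# Hypothetical, tiny English-to-Spanish phrasebook, invented for illustration.
PHRASEBOOK = {
    "good morning": "buenos días",
    "the": "el",
    "dog": "perro",
    "sleeps": "duerme",
}


def translate(sentence):
    """Translate by greedy longest-phrase lookup, then stitch the pieces together."""
    words = sentence.lower().split()
    output = []
    i = 0
    while i < len(words):
        # Try the longest phrase starting at position i first.
        for j in range(len(words), i, -1):
            phrase = " ".join(words[i:j])
            if phrase in PHRASEBOOK:
                output.append(PHRASEBOOK[phrase])
                i = j
                break
        else:
            # No entry matched: the program has nothing to fall back on,
            # so it can only flag the unfamiliar word.
            output.append(f"<{words[i]}?>")
            i += 1
    return " ".join(output)


print(translate("Good morning"))      # a memorized phrase
print(translate("The dog sleeps"))    # memorized words, stitched together
print(translate("The puppy sleeps"))  # one unfamiliar word breaks the lookup
```

Because the program only matches stored symbols, a single unseen word such as "puppy" leaves a hole in the output, even though a person who knows "dog" could make a reasonable guess.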

Machines already have the ability to learn. The big challenge for the future of AI and machine learning will be to enable machines to do a better job of generalizing and specifying. Instead of merely matching memorized symbols, newer machines will create abstract models based on the patterns they observe (the patterns they are fed). These models will have the potential to help these machines learn more effectively, so they can more accurately interpret unfamiliar future input.
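One very simple way to picture an "abstract model" is a prototype: an average of the examples seen for each label, against which new input is compared. The sketch below assumes hypothetical two-number features (body size, snout length) and made-up training data. The names `fit_prototypes` and `predict` are illustrative, and a nearest-prototype classifier is only one of many possible modeling choices.

```python
from collections import defaultdict


def fit_prototypes(examples):
    """Average the feature vectors of each label into one prototype per label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(prototypes, features):
    """Label new input by the prototype it sits closest to."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(prototypes, key=lambda label: squared_distance(prototypes[label], features))


# Hypothetical training data: (body size, snout length), each scaled 0..1.
TRAINING = [
    ((0.6, 0.8), "dog"),
    ((0.4, 0.6), "dog"),
    ((0.2, 0.2), "cat"),
    ((0.4, 0.2), "cat"),
]

prototypes = fit_prototypes(TRAINING)
print(predict(prototypes, (0.5, 0.75)))  # an unseen example, compared to the prototypes
```

The model here stores two averaged prototypes, not the individual examples, which is a rough analogue of forming an abstract idea of what a dog looks like and then using it to interpret unfamiliar input.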

As machines become better at generalizing and specifying, they will achieve greater levels of intelligence. It remains to be seen, however, whether machines will ever have the capacity to develop self-awareness and self-determination, which are key characteristics of human intelligence.

