Well-established data science team roles will help your team discover insights. The data science team structure is more than just a data scientist and a data engineer.

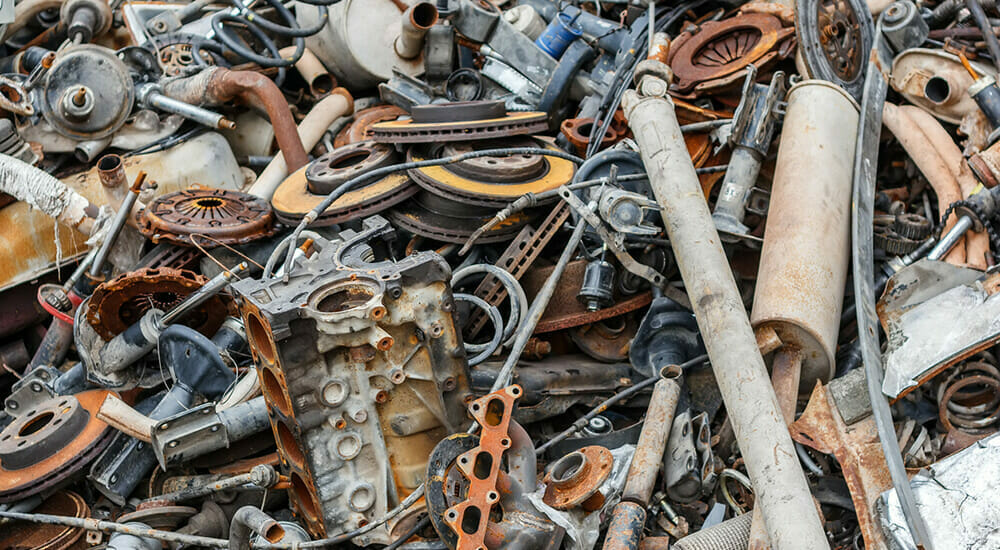

Software developers have a popular saying: “Garbage in, garbage out.” They even have an acronym for it: GIGO. What is true for computer science is just as true for data science, perhaps even more so: if the data you’re analyzing is false, misleading, out of date, irrelevant, or insufficient, any decision based on that data is likely to be a poor one.

The challenge of GIGO is compounded by the fact that data volume is growing exponentially. Big data is certainly valuable, but it comes with big garbage: inaccurate or irrelevant data that can contaminate the pools of data being analyzed. Big garbage creates a lot of “noise” that can result in misleading information and projections.

In this article, I discuss various ways to prevent big data from becoming big garbage.

More isn’t necessarily better when it comes to data. While you can’t always determine ahead of time which data is relevant and which isn’t, try to be selective when choosing data sets for analysis. Focus on key data. Including excess data is likely to cloud the analytics in addition to increasing demand on potentially limited resources, such as storage and compute.

Data warehouses tend to become cluttered with old data that may no longer be relevant. Your data team must decide whether to keep all the data or delete some of it. That decision isn’t always easy. Some analysts argue that storage (especially cloud storage) is inexpensive and that you never know when a volume of historical data will come in handy, so retaining all data is the best approach. Besides, buying more storage is probably cheaper and less aggravating than engaging in tiresome data retention meetings. Others argue that large volumes of data push the limits of the data warehouse and increase the chances of irrelevant data producing misleading reports, so deleting old data makes the most sense.

The only right decision regarding whether to retain or delete old data is the one that’s best for the organization and its business intelligence needs. You can always archive old data that isn’t likely to be useful and then delete it if nobody has used it in the past couple of years. Whatever you choose to do with old data, you should have a strategy in place.
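One way to turn such a strategy into something the whole team can see is to write it down as a small rule. The following is only a sketch under stated assumptions: the `Dataset` class, the `retention_action` function, and the one-year archive threshold are hypothetical, and the two-year delete threshold is just one reading of "a couple of years."

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical thresholds; adjust them to your organization's needs.
ARCHIVE_AFTER = timedelta(days=365)      # unused for a year: move to the archive
DELETE_AFTER = timedelta(days=2 * 365)   # unused for about two years: delete


@dataclass
class Dataset:
    name: str
    last_accessed: date


def retention_action(dataset: Dataset, today: date) -> str:
    """Return 'keep', 'archive', or 'delete' based on time since last access."""
    idle = today - dataset.last_accessed
    if idle >= DELETE_AFTER:
        return "delete"
    if idle >= ARCHIVE_AFTER:
        return "archive"
    return "keep"
```

A scheduled job could call `retention_action` for each data set and report the result, so that nothing is archived or deleted without the team having agreed on the thresholds first.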

Many companies collect as much data as possible out of fear that they will fail to capture data that is later deemed essential. These companies often end up with unmanageable systems in which the integrity, and hence the reliability, of the data suffer.

I once worked for a company that fell into this common trap. They owned a website that connected potential car buyers with automobile dealerships. They created a tagging system that recorded all activity on the website. Whenever a customer pointed at or clicked on something, opened a new page, closed a page, or acted in any other way, the site recorded the event.

The system grew into thousands of tags. Each of these tags recorded millions of transactions. Only a few people in the company understood what each tag represented. They could tell the number of times someone interacted with a tagged object, but only a few people could figure out from the tag which object it was or how it related to the person who interacted with it.

They used the same tagging system for advertisements and videos posted on the site. They wanted to connect each tag to the image and the transaction, so they could see the image that the customer clicked as well as data from the tag that indicated where the image was located on the page. All of this information was stored in an expanding Hadoop cluster. Unfortunately, the advertisements constantly changed, and the people in charge of tagging items started renaming the tags, so the integrity of the data being captured suffered.

The problem wasn’t that the organization was capturing too little or too much data but that it had no system in place for organizing and understanding that data.
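To illustrate what a system for organizing and understanding tags might look like, here is a minimal sketch of a tag registry. It is not what the company in this story built. The `TagRegistry` class, its method names, and the `tag_id` event field are all hypothetical. The idea is that each tag gets a stable ID that is never renamed, a description anyone can read, and a check that rejects events carrying unknown tags.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class TagRegistry:
    """Maps stable tag IDs to human-readable descriptions (hypothetical design)."""
    descriptions: Dict[str, str] = field(default_factory=dict)

    def register(self, tag_id: str, description: str) -> None:
        # Refuse to reuse an ID, so old events never change meaning.
        if tag_id in self.descriptions:
            raise ValueError(f"tag {tag_id!r} is already registered")
        self.descriptions[tag_id] = description

    def relabel(self, tag_id: str, description: str) -> None:
        # Only the description changes; the ID stored with each event stays fixed.
        if tag_id not in self.descriptions:
            raise KeyError(f"unknown tag {tag_id!r}")
        self.descriptions[tag_id] = description

    def is_valid(self, event: dict) -> bool:
        # An event is accepted only if its tag is documented in the registry.
        return event.get("tag_id") in self.descriptions


registry = TagRegistry()
registry.register("ad-banner-top", "Banner advertisement at the top of the page")
print(registry.is_valid({"tag_id": "ad-banner-top", "action": "click"}))
print(registry.is_valid({"tag_id": "renamed-on-a-whim", "action": "click"}))
```

With a registry like this, changing what people call a tag does not break the link between past and future events, and anyone on the team can look up what a tag represents.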

To prevent big data from becoming big garbage, the most important precaution is to be sure that the data team is making conscious decisions and acting with intent. You don’t want a data policy that changes every few months. Decide in advance what you want to capture and save — what data is essential for the organization to achieve its business intelligence goals. Work with the team to make sure everyone agrees with and understands the policy. If you don’t have a set policy, you may corrupt all the data or just enough of it to destroy its integrity, and unreliable data can be worse than having no data at all.
