The neural network chain rule works with backpropagation to help calculate the cost of the error in the gradient descent.

In one of my previous articles, Perceptron History, I described an artificial neuron that produces a binary output (0 or 1). Because of that, the perceptron can distinguish between only two classes.

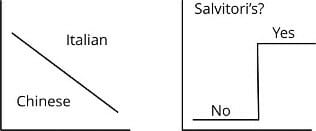

For example, if I gave the perceptron descriptions of two restaurants (one Italian, one Chinese) and asked which one I should choose, the best it could do is give each a thumbs up or a thumbs down to help me distinguish between the two.

If you were to graph the output of the perceptron, it would look like a stair step, flat at 0 and then jumping straight to 1:

What I’d really like is a sliding scale that gives me some indication of the relative quality of the restaurant, maybe a rating from 0 to 5. The closer the rating is to 5, the more confident the network is in the quality of the restaurant. If it’s close to 0, then I should probably go somewhere else. For this purpose, I might try to use something different: a sigmoid neuron.

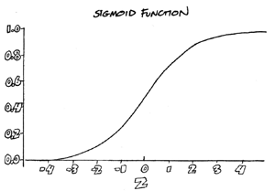

A sigmoid neuron can handle far more variation in values than the binary choices you get with a perceptron. The standard sigmoid function squeezes any input into a value between 0 and 1, and you can rescale that output to any range you want, such as 0 to 5. It’s called a sigmoid neuron because its function’s output forms an S-shaped curve when plotted on a graph; an S is almost like a line that’s been squeezed into a smaller space.
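To make the "squeezing" concrete, here is a minimal Python sketch. The function names `sigmoid` and `scaled_sigmoid` and the 0-to-5 range are my own choices for illustration, borrowed from the restaurant rating idea; they are not part of any particular library.

```python
import math

def sigmoid(z: float) -> float:
    """Standard logistic sigmoid: maps a real number into the range (0, 1).

    Note: for very large negative z, math.exp(-z) can overflow; this simple
    version is only meant for moderate inputs.
    """
    return 1.0 / (1.0 + math.exp(-z))

def scaled_sigmoid(z: float, lower: float = 0.0, upper: float = 5.0) -> float:
    """Stretch the sigmoid's (0, 1) output onto the range (lower, upper)."""
    return lower + (upper - lower) * sigmoid(z)

# Inputs far below zero land near the lower limit, inputs far above zero
# land near the upper limit, and an input of 0 lands exactly in the middle.
for z in (-6.0, -2.0, 0.0, 2.0, 6.0):
    print(f"z = {z:+.1f} -> rating {scaled_sigmoid(z):.2f}")
```

Plotting `scaled_sigmoid` over a range of inputs would trace out the S-shaped curve, just stretched vertically to run from 0 to 5 instead of from 0 to 1.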

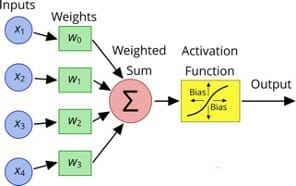

The sigmoid neuron works like a perceptron, using weighted inputs. The key difference is the activation function. Instead of using a stair-step function that results in binary classification (0 or 1), a sigmoid neuron uses the sigmoid function to deliver a continuous range of values between the lower and upper limits (0 and 1 by default).
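The difference between the two activation functions can be sketched side by side in Python. Both neurons below share the same weighted sum and differ only in the last step. The weights, bias, and restaurant features (food quality, service, price fairness) are hypothetical values I made up for the example.

```python
import math

def weighted_sum(inputs, weights, bias):
    """Multiply each input by its weight, add them up, then add the bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

def perceptron(inputs, weights, bias):
    """Stair-step activation: output 1 if the weighted sum is positive, else 0."""
    return 1 if weighted_sum(inputs, weights, bias) > 0 else 0

def sigmoid_neuron(inputs, weights, bias):
    """Sigmoid activation: output a value strictly between 0 and 1."""
    z = weighted_sum(inputs, weights, bias)
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical features on a 0-to-1 scale: food quality, service, price fairness.
weights = [2.0, 1.0, 1.5]   # assumed weights, not learned from real data
bias = -2.0                 # assumed bias

good = [0.9, 0.8, 0.7]
borderline = [0.5, 0.45, 0.4]

for name, features in (("good", good), ("borderline", borderline)):
    step = perceptron(features, weights, bias)
    smooth = sigmoid_neuron(features, weights, bias)
    print(f"{name}: perceptron = {step}, sigmoid neuron = {smooth:.3f}")
```

With these made-up numbers the perceptron gives both restaurants the same thumbs up, while the sigmoid neuron scores the borderline restaurant only slightly above 0.5 and the good one noticeably higher.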

If I were to use the sigmoid neuron to help me decide whether to eat at a certain restaurant, it would provide me with a clearer indication of the restaurant's quality. Instead of giving a thumbs up or thumbs down, it provides a value within a range of values, which indicates the probability of the restaurant being a good choice.

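The sigmoid neuron is a key to deep learning, a subset of machine learning in which neural networks with many layers learn from data, including data that is unstructured. Because the sigmoid curve is smooth, a small change in a weight or bias produces a small change in the output, which is what lets a learning algorithm adjust the network gradually. Using a learning algorithm, you can start by assigning random values to the weights and bias. When you feed in the data inputs, the neural network calculates the predicted outcome and computes the overall loss (squared error loss) of the model by comparing its predictions with the expected outcomes.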
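Here is a rough Python sketch of that starting point: random weights and bias, a prediction for each input, and the overall squared error loss. Squared error needs a target value to compare each prediction against, so the sketch assumes every example comes paired with one. The tiny dataset and the helper names are invented for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    """Forward pass of a single sigmoid neuron."""
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)

def squared_error_loss(data, weights, bias):
    """Sum of squared differences between predictions and target values."""
    return sum((predict(x, weights, bias) - y) ** 2 for x, y in data)

# Made-up examples: (features, target), where the target is 1.0 for a
# restaurant I liked and 0.0 for one I did not.
data = [
    ([0.9, 0.8], 1.0),
    ([0.2, 0.1], 0.0),
    ([0.7, 0.9], 1.0),
    ([0.3, 0.2], 0.0),
]

random.seed(0)  # fixed seed so the run is repeatable
weights = [random.uniform(-1.0, 1.0) for _ in range(2)]
bias = random.uniform(-1.0, 1.0)

loss = squared_error_loss(data, weights, bias)
print(f"random weights = {weights}, bias = {bias}")
print(f"overall squared error loss = {loss:.4f}")
```

With random weights the loss is usually far from zero, which is the signal the network uses to decide how to adjust itself.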

Based on the overall loss, the neural network adjusts the weights and biases and repeats its calculations. It continues this process until the overall loss is as close to zero as possible, meaning that the output is as accurate as possible.
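One common way to do this adjusting is gradient descent, sketched below for a single sigmoid neuron. This is a simplified illustration under my own assumptions (a made-up labeled dataset, a fixed learning rate, and a fixed number of passes), not the only way a network can be trained.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)

def total_loss(data, weights, bias):
    """Overall squared error loss across all examples."""
    return sum((predict(x, weights, bias) - y) ** 2 for x, y in data)

def train(data, weights, bias, learning_rate=0.5, epochs=2000):
    """Repeatedly nudge the weights and bias in the direction that lowers the loss."""
    weights = list(weights)  # copy so the caller's list is left untouched
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            p = predict(x, weights, bias)
            # Derivative of (p - y)^2 with respect to the weighted sum,
            # using the sigmoid's derivative p * (1 - p).
            delta = 2.0 * (p - y) * p * (1.0 - p)
            for i, xi in enumerate(x):
                grad_w[i] += delta * xi
            grad_b += delta
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias

# Made-up examples: (features, target).
data = [
    ([0.9, 0.8], 1.0),
    ([0.2, 0.1], 0.0),
    ([0.7, 0.9], 1.0),
    ([0.3, 0.2], 0.0),
]

start_weights, start_bias = [0.1, -0.1], 0.0  # arbitrary starting values
before = total_loss(data, start_weights, start_bias)
trained_weights, trained_bias = train(data, start_weights, start_bias)
after = total_loss(data, trained_weights, trained_bias)
print(f"loss before training: {before:.4f}")
print(f"loss after training:  {after:.4f}")
```

The loss after training should be much smaller than the loss before. In practice you would stop when the loss stops improving rather than after a fixed number of passes.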

The complexity of the mathematics involved in learning functions is beyond the scope of a general article such as this one. The key takeaways here are that the sigmoid neuron takes the perceptron to the next level, enabling it to output a range of values between the specified upper and lower limits, and that its smooth output enables a neural network to learn by gradually adjusting its weights and biases to reduce the loss.


The artificial neural network cost function helps machine learning systems learn from their mistakes.

Artificial neural network regression and classification problems are usually solved using supervised machine learning.